Part One of Value-Delivery in the Rise-and-Decline of General Electric

Illusions of Destiny-Controlled & the World’s Real Losses

By Michael J. Lanning––Copyright © 2021 All Rights Reserved

This is Part One of our four-part series analyzing the role of strategy in GE’s extraordinary rise and dramatic decline. Our earlier post provides an Introductory Overview to this series. (At the end of this Part One, the reader can use a link that is provided to Part Two.)

We contend that businesses now more than ever need strategies focused on profitably delivering superior value to customers. Such strategy requires product-or-service innovation that is: 1) customer-focused; 2) often science-based; and 3) in a multi-business firm, able to share powerful synergies across businesses.

As this Part One discusses, GE in its first century––1878-1980––applied these three strategic principles, producing the great product-innovations that enabled the company’s vast, profitable businesses.

After 1981, however, as discussed later in Parts Two-Four, GE would largely abandon those three principles, eventually leading the company to falter badly. Thus, Part One focuses on GE’s first century and its use of those three strategic principles, because they are the key both to the company’s rise and––by neglect––its decline.

Note from the author: special thanks to colleagues Helmut Meixner and Jim Tyrone for tremendously valuable help on this series.

Customer-focused product-innovation––in GE’s value delivery systems

In GE’s first century, its approach to product-innovation was fundamentally customer-focused. Each business was comprehensively integrated around profitably delivering winning value propositions––superior sets of customer-experiences, including costs. We term a business managed in this way a “value delivery system,” an apt description for most GE businesses and their associated innovations in that era. Two key examples were the systems developed and expanded by GE, first for electric lighting-and-power (1878-1913), and then––more slowly but also highly innovatively––for medical imaging (1913 and 1970-80).

GE’s customer-focused product-innovation in electric lighting-and-power (1878-1913)

From 1878 to 1882, Thomas Edison and team developed the electric lighting-and-power system, GE’s first and probably most important innovation. Their goal was large-scale commercial success––not just an invention––by replacing the established indoor gas-lighting system.[1] That gas system delivered an acceptable value proposition––a combination of indoor-lighting experiences and cost that users found satisfactory. To succeed, Edison’s electric system needed to profitably deliver a new value proposition that those users would find clearly superior to the gas system.

Therefore, in 1878-80, Edison’s team––with especially important contributions by the mathematician/physicist Francis Upton––closely studied that gas system, roughly modeling their new electric lighting-and-power system on it. Replacing gas-plants would be “central station” electricity generators; just as pipes distributed gas under the streets, electrical mains would carry current; replacing gas meters would be electricity meters; and replacing indoor gas-lamps would be electric, “incandescent” lamps. However, rather than developing a collection of independent electric products, Edison and Upton envisioned a single system, its components working together to deliver superior indoor-lighting experiences, including its usage and total cost. It was GE’s first value-delivery-system.

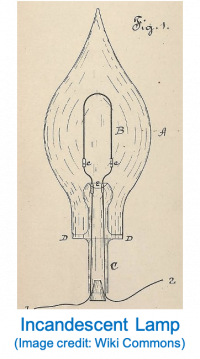

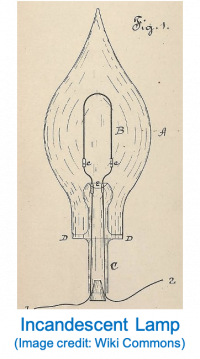

Since 1802, when Humphry Davy first demonstrated the phenomenon of incandescence (a wire heated by electric current could produce light), researchers had tried to develop a working incandescent lamp to enable indoor electric lighting. In such a lamp, typically enclosed in a glass bulb, two conductors at the lamp’s base, connected to a power source, were attached to a filament (‘B’ in Edison’s 1880 patent drawing at right). As current flowed, the filament’s resistance converted the electricity’s energy into heat, raising the filament to incandescence. To prevent the filament from burning up, the air was pumped out of the bulb, leaving a (partial) vacuum.

By 1879, Edison and other researchers expected such electric-lighting, using incandescent lamps, to replace indoor gas-lighting by improving three user-experiences: 1.) lower fire and explosion risks, with minimal shock-risks; 2.) no more “fouling” of the users’ air (gas lights created heat and consumed oxygen); and 3.) higher quality, steadier light (not flickering), with more natural colors.

Yet a fourth user-experience, lamp-life, determined by filament durability, was a problem. Researchers had developed over twenty lamps, but the best still lasted only fourteen hours. Most researchers focused primarily on this one problem. Edison’s team also worked on it, eventually improving lamp durability to over 1200 hours. However, this team uniquely realized that increased durability, while necessary, was insufficient. Another crucial experience was the user’s total cost. Understanding this experience would require analyzing how the lamps interacted with the system supplying their electric power.

Of course, marketplace success for the electric-lighting system would require that its total costs for users be competitive––close to if not below gas-lighting––and allow the company an adequate profit margin. However, the team’s cost projections, using then-common assumptions about incandescent-lamp design, showed totally unaffordable costs for the electric system.

All other developers of incandescent lamps before 1879 used a filament with low resistance. They did so because their key goal was lasting, stable incandescence, and low resistance increased the energy flow, which they expected to sustain incandescence. However, a low-resistance filament draws a large current, and a large current wastes much energy as heat in the conductors. To compensate, developers had to increase the conductors’ cross-sectional area, which meant using more copper, very expensive in large quantities. Citing a 1926 essay attributed to Edison, historian Thomas Hughes quotes Edison on why low-resistance filaments were a major problem:

“In a lighting system the current required to light them in great numbers would necessitate such large copper conductors for mains, etc., that the investment would be prohibitive and absolutely uncommercial. In other words, an apparently remote consideration (the amount of copper used for conductors), was really the commercial crux of the problem.”

Thus, the cost of the electric power would be the key to the user’s total cost of electric lighting, and that power cost would be driven most crucially by the lamp’s design. Applying the science of electrical engineering (as discussed in the section on “science-based product-innovation,” later in this Part One), Edison––with key help from Upton––discovered the most important insight of their project. As Hughes wrote in 1979, Edison “…realized that a high-resistance filament would allow him to achieve the energy consumption desired in the lamp and at the same time keep low the level of energy loss in the conductors and economically small the amount of copper in the conductors.” Lamp design was crucial to total cost, not because of the lamp’s manufacturing cost but because of its impact on the cost of supplying electricity to it. What mattered was the whole value-delivery-system.
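The copper argument can be made concrete with a simplified calculation. The sketch below is not Edison’s or Upton’s actual analysis: it assumes a single lamp of fixed power fed through a line of fixed resistance, and the 100 W, 0.5-ohm, 2-ohm, and 100-ohm figures are hypothetical. Because a lamp of power P and filament resistance R draws a current of √(P/R), the line dissipates P·R_line/R, so a high-resistance filament shrinks the line loss or, for the same loss, lets the conductors use far less copper.

```python
import math


def line_loss_watts(lamp_power_w: float, lamp_ohms: float, line_ohms: float) -> float:
    """Power wasted in the conductors while the lamp receives lamp_power_w."""
    # For a fixed lamp power P = I^2 * R_lamp, the current is I = sqrt(P / R_lamp).
    current_a = math.sqrt(lamp_power_w / lamp_ohms)
    # The conductors dissipate I^2 * R_line, which simplifies to P * R_line / R_lamp.
    return current_a ** 2 * line_ohms


def relative_copper(lamp_ohms: float, loss_fraction: float) -> float:
    """Relative copper needed to hold line loss to loss_fraction of lamp power."""
    # P * R_line / R_lamp = f * P gives an allowed line resistance of f * R_lamp.
    allowed_line_ohms = loss_fraction * lamp_ohms
    # For a line of fixed length, resistance is inversely proportional to the
    # conductor cross-section, so copper volume scales as 1 / R_line.
    return 1.0 / allowed_line_ohms


if __name__ == "__main__":
    # Hypothetical values: a 100 W lamp fed through a 0.5-ohm line.
    for label, r_lamp in (("low-resistance filament", 2.0), ("high-resistance filament", 100.0)):
        loss = line_loss_watts(100.0, r_lamp, 0.5)
        print(f"{label}: {loss:.1f} W lost in the line")
    ratio = relative_copper(2.0, 0.05) / relative_copper(100.0, 0.05)
    print(f"copper needed, low vs high resistance: {ratio:.0f}x")
```

With these illustrative numbers, the 2-ohm filament wastes 25 W in the line against 0.5 W for the 100-ohm filament, and holding both to a 5% line loss would take 50 times as much copper for the low-resistance design.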

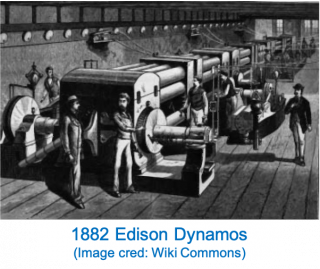

Rethinking generation, Edison found that dynamos––the existing giant generators––were unacceptably inefficient (30-40%). So, he designed one himself with an efficiency rate exceeding ninety percent. Distribution cost was also reduced by Edison’s clever “feeder-and-main” reconfiguration of distribution lines.

Success, however, would also require individual lamp-control. Electric streetlamps, the main pre-Edison electric lighting, were connected in series: a single circuit connected the lamps and turned them all on and off at once, and if one lamp burned out, they all went out. Edison knew that indoor lighting would only be practical if each lamp could be turned on or off independently of the others, as gas lamps had always allowed. So Edison developed a parallel circuit, giving each lamp its own path for current, so that each lamp could be turned on or off individually while current continued to flow to the others.
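The difference between the two wirings can be shown with a toy model. The sketch below assumes identical, purely resistive lamps and an ideal supply; the 120 V figure and the function names are hypothetical. It reports the voltage across each lamp, given which switches are closed.

```python
from __future__ import annotations


def series_voltages(supply_v: float, switches: list[bool]) -> list[float]:
    """Voltage across each identical lamp wired in one series loop."""
    # A single open switch (or burned-out lamp) breaks the only path for current,
    # so every lamp in the loop goes dark.
    if not all(switches):
        return [0.0] * len(switches)
    # Identical lamps in series split the supply voltage equally.
    return [supply_v / len(switches)] * len(switches)


def parallel_voltages(supply_v: float, switches: list[bool]) -> list[float]:
    """Voltage across each lamp when every lamp has its own branch."""
    # Each branch connects directly across the supply, so switching one lamp
    # off leaves the others at full voltage.
    return [supply_v if closed else 0.0 for closed in switches]


if __name__ == "__main__":
    switches = [True, False, True]  # the middle lamp is switched off
    print("series:  ", series_voltages(120.0, switches))
    print("parallel:", parallel_voltages(120.0, switches))
```

Switching off the middle lamp darkens the whole series loop, while in the parallel circuit the other two lamps stay at full voltage.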

As historian Paul Israel writes, “Edison was able to succeed where others had failed because he understood that developing a successful commercial lamp also required him to develop an entire electrical system.” Thus, Edison had devised an integrated value-delivery-system––all components designed to work together, delivering a unifying value proposition. Users were asked to switch indoor-lighting from gas to electric, in return for superior safety, more comfortable breathing, higher light-quality, and equal individual lamp-control, all at lower cost. The value proposition was delivered by high-resistance filaments, more efficient dynamos, feeder-and-main distribution, and lamps connected in parallel. Seeing this interdependence, Edison wrote (in public testimony he gave later), as quoted by Hughes:

It was not only necessary that the lamps should give light and the dynamos generate current, but the lamps must be adapted to the current of the dynamos, and the dynamos must be constructed to give the character of current required by the lamps, and likewise all parts of the system must be constructed with reference to all other parts… The problem then that I undertook to solve was stated generally, the production of the multifarious apparatus, methods, and devices, each adapted for use with every other, and all forming a comprehensive system.

In its first century, GE repeatedly used this strategic model of integration around customer-experiences. However, its electric lighting-and-power system was still incomplete in the late 1880s, and its two components, first power and then lighting, soon faced crises.

In 1886, George Westinghouse introduced high-voltage alternating-current (AC) systems with transformers, enabling long-distance transmission, reaching many more customers. Previously a customer-focused visionary, Edison became internally-driven, resisting AC, unwilling to abandon his direct-current (DC) investment, citing safety––unconvincingly. His bankers recognized AC as inevitable and viewed lagging behind Westinghouse as a growing crisis. They forced Edison to merge with an AC competitor, formally creating GE in 1892 (dropping the name of Edison, who soon left). GE would finally embrace AC.

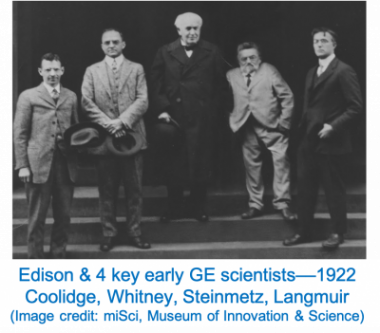

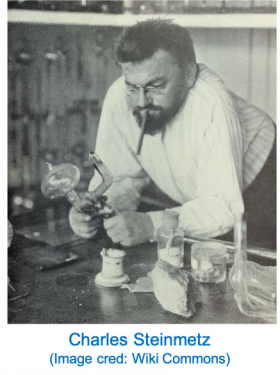

However, AC was more problematic. Its adoption was slowed by a chronic loss of power in AC motors and generators due to magnetism, a flaw that could only be discovered after a device was built and tested. Then, in 1892, the brilliant young German-American mathematician and electrical engineer Charles Steinmetz published his law of hysteresis, the first mathematical description of the power lost to magnetism in materials. Now that engineers could calculate and minimize such losses while devices were still being designed, AC’s use became much more feasible.
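Steinmetz’s result is usually written as an empirical law: hysteresis power loss P ≈ k_h · f · B_max^1.6 · V, where f is the frequency, B_max the peak flux density, V the volume of iron, and k_h a constant measured for each material. The sketch below evaluates it at the design stage, as the paragraph describes; the coefficient, frequency, and volume are hypothetical, and modern practice often fits the exponent per material rather than using Steinmetz’s 1.6.

```python
def hysteresis_loss_w(k_h: float, freq_hz: float, b_max_t: float, volume_m3: float) -> float:
    """Hysteresis power loss from Steinmetz's empirical law, P = k_h * f * B_max**1.6 * V."""
    # Energy lost in each magnetization cycle grows as the peak flux density to the
    # 1.6 power; multiplying by cycles per second turns it into a power.
    return k_h * freq_hz * b_max_t ** 1.6 * volume_m3


if __name__ == "__main__":
    # Hypothetical design values: k_h in joules per cubic metre per cycle at 1 T.
    K_H, FREQ_HZ, VOLUME_M3 = 500.0, 60.0, 0.01
    for b_max in (1.0, 1.2):
        loss = hysteresis_loss_w(K_H, FREQ_HZ, b_max, VOLUME_M3)
        print(f"B_max = {b_max} T: {loss:.0f} W")
```

Raising the peak flux density by 20% raises the loss by about 34% (1.2^1.6 ≈ 1.34), the kind of trade-off a designer could weigh before building anything.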

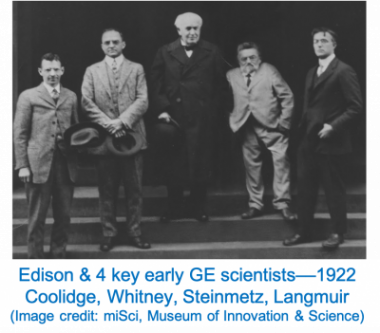

In 1893 Steinmetz joined GE. He understood the importance of AC for making electricity more widely available and helped lead GE’s development of the first commercial three-phase AC power system. It was he who understood its mathematics, and with his leadership it would prevail over Westinghouse’s two-phase system.

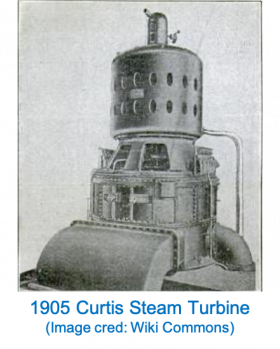

However, the first generators were powered by reciprocating steam engines, and engineers knew that AC needed faster-revolving generators. A possible solution was Charles Parsons’s steam turbine, which Westinghouse, after buying its US rights in 1895, was improving. Then, in 1896, engineer-lawyer Charles Curtis developed and patented a design using aspects of Parsons’ and others’ designs. In part, his design made better use of steam-energy.

Yet, when he approached GE, some––including Steinmetz––were skeptical. However, GE––with no answer for the imminent Parsons/Westinghouse turbine––stood to lose ground in the crucial AC expansion. Curtis won a meeting with GE technical director Edwin Rice who, along with CEO Charles Coffin, understood the threat and agreed to back Curtis.

The project struggled while Westinghouse installed its first commercial steam turbine in 1901. However, GE persisted, adding brilliant GE engineer William Emmet to the team, which in 1902 developed a new vertical configuration saving space and cost. Finally, in 1903 the Curtis turbine succeeded, at only one-eighth the weight and one-tenth the size of existing turbines, yet with the same level of power. With its shorter central shaft less prone to distortion, and its lower cost, GE’s value proposition for AC to power-station customers was now clearly superior to that of Westinghouse. After almost missing the AC transition, GE had now crucially expanded the power component of its core electric value-delivery-system.

As of 1900, GE’s electric lighting business, the complement to its electric power business, had been a major success. However, with Edison’s patents expiring by 1898, the lighting business now also faced a crisis. GE’s incandescent lamp filaments in 1900 had low efficiency––about 3 lumens per watt (LPW), no improvement since Edison. Since higher efficiency would give users more brightness for the same power and electricity cost, other inventors worked toward this goal, using new metal filaments. GE Labs’ first director, Willis Whitney, discovered that at extremely high temperatures, carbon filaments would assume metallic properties, increasing filament efficiency to 4 LPW. However, new German tantalum lamps then reached 5 LPW, taking leadership from GE from 1902 to 1911.

Meanwhile, tungsten filaments emerged; thanks to tungsten’s very high melting point, they achieved a much better 8 LPW. GE inexpensively acquired the patent for these, but the filaments were too fragile. As the National Academy of Sciences explains, “The filaments were brittle and could not be bent once formed, so they were referred to as ‘non-ductile’ filaments.” Then, GE lab physicist and electrical engineer William Coolidge, hired by Whitney, developed a process of purifying tungsten oxide to produce filaments that were not brittle at high temperatures.

Coolidge’s ductile tungsten was a major success, with efficiency of 10 LPW and longer durability than competing metal filaments. Starting in 1910, GE touted the new lamp’s superior efficiency and durability, quickly restoring its leadership in the huge incandescent lamp market. This strong position was reinforced in 1913 when another brilliant scientist hired by Whitney, Irving Langmuir, showed that replacing the lamp’s vacuum with inert gas doubled the filament’s life span. The earlier-acquired tungsten-filament patent would protect GE’s lighting position well into the 1930s. Thus, design of the incandescent lamp, and the optimization of the value proposition that users would derive from it, were largely done.
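The practical meaning of these efficiency gains is simple division: the electrical power needed for a given amount of light is lumens divided by lumens per watt. The sketch below uses the LPW figures cited above; the 1,000-lumen target and the short lamp labels are hypothetical.

```python
# Lamp efficacies in lumens per watt (LPW), as cited in the text.
EFFICACY_LPW = {
    "carbon filament (c. 1900)": 3.0,
    "Whitney's treated carbon": 4.0,
    "German tantalum": 5.0,
    "brittle tungsten": 8.0,
    "Coolidge ductile tungsten": 10.0,
}


def watts_for_light(lumens: float, efficacy_lpw: float) -> float:
    """Electrical power (W) needed to produce the given light output."""
    return lumens / efficacy_lpw


if __name__ == "__main__":
    TARGET_LUMENS = 1_000.0  # hypothetical light output for comparison
    for lamp, lpw in EFFICACY_LPW.items():
        print(f"{lamp:28s} {watts_for_light(TARGET_LUMENS, lpw):6.1f} W")
```

For the same light, a 10 LPW lamp draws 100 W where a 3 LPW carbon lamp draws about 333 W, cutting the electricity used by 70%.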

Thus, the company had expanded and completed its electric lighting-and-power value-delivery-system. After initially building that great system, GE had met the AC crisis in the power business and then the filament-efficiency crisis in lighting. GE had built its stable, key long-term core business.

GE’s customer-focused product-innovation in medical imaging (1913 and 1970-80)

Like its history in lighting-and-power, GE built and later expanded its customer-focused product innovation in medical imaging, profitably delivering sustained superior value.

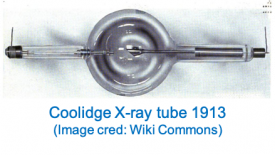

Shortly after X-rays were first discovered in 1895, it was found that they pass through a body’s soft tissue but are largely blocked by hard tissue such as bones and teeth. As a result, X-rays passing through a body could record a shadow image of its hard tissue on a photographic plate or film, much as visible light does in photography. The medical community immediately recognized X-rays’ revolutionary promise for diagnostic use.

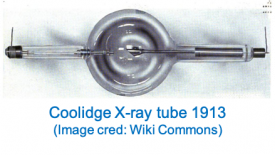

However, X-rays and these images were produced by machines known as X-ray tubes, which before 1913 were erratic and required highly skilled operators. The work of GE’s Coolidge, with help from Langmuir, would transform these devices, allowing them to deliver their potential value proposition––efficiently enabling accurate, reliable diagnosis by radiology technicians and physicians. We saw earlier that Edison’s team did not focus narrowly on inventing a light-bulb (the incandescent lamp) but rather on designing the entire value-delivery-system for electric lighting-and-power. Similarly, Coolidge and Langmuir did not focus narrowly on producing X-rays, but on understanding and redesigning the whole medical-imaging value-delivery-system that used X-rays to capture the images.

The basis for X-ray tubes was “vacuum tubes.” First developed in the early 1900s, these are glass cylinders from which all or most gas has been removed, leaving an at-least partial vacuum. The tube typically contains at least two “electrodes,” or contacts––a cathode and an anode. By 1912, potentially useful vacuum tubes had been developed but were unpredictable and unreliable.

X-rays are produced by generating and accelerating electrons in a special vacuum tube. When the cathode is heated, it emits a stream of electrons that collide with the anode. As the electrons decelerate, most of their energy is converted to heat, and only about one percent is converted to X-rays. In 1912 Coolidge, who had improved incandescent lamps with ductile tungsten, suggested replacing the platinum electrodes then used in X-ray tubes with tungsten. Tungsten’s high atomic number (74, comparable to platinum’s 78) kept X-ray output efficient, while its far higher melting point enabled good performance in the tube’s high-heat conditions.
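The “about one percent” figure matches a common rule of thumb for X-ray production efficiency: roughly k·Z·V, where Z is the anode’s atomic number, V the tube voltage in volts, and k on the order of 1×10⁻⁹ per volt. Textbook values of k differ by source, so the constant below is an assumption, as is the 100 kV tube voltage.

```python
# Approximate efficiency constant, per volt; textbook values are on the order of
# 1e-9 and differ by source, so treat this one as an assumption.
K_PER_VOLT = 1.1e-9

ATOMIC_NUMBER = {"tungsten": 74, "platinum": 78}


def xray_efficiency(atomic_number: int, tube_kv: float, k: float = K_PER_VOLT) -> float:
    """Approximate fraction of the electron beam's energy emitted as X-rays."""
    # The yield grows with both anode atomic number and accelerating voltage;
    # everything not emitted as X-rays ends up as heat in the anode.
    return k * atomic_number * tube_kv * 1_000.0


if __name__ == "__main__":
    for metal, z in ATOMIC_NUMBER.items():
        eff = xray_efficiency(z, 100.0)  # hypothetical 100 kV tube
        print(f"{metal}: {eff:.2%} X-rays, {1 - eff:.2%} heat")
```

At these assumed settings both metals turn under one percent of the beam’s energy into X-rays and the rest into heat, and their yields differ only slightly; tungsten’s advantage lay in surviving that heat.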

The early X-ray tubes also contained much residual gas, used as the source of electrons to generate X-rays. In 1913, however, Langmuir showed the value of “high-vacuum” tubes, i.e., with no residual gas, produced with processes he had used earlier in improving incandescent lamps. He then found that a controlled emission of electrons could be obtained by using one of Coolidge’s hot tungsten cathodes in a high vacuum. Coolidge quickly put a heated tungsten cathode in an X-ray tube, with a tungsten anode. This “hot cathode, Coolidge Tube” provided the first stable, controllable X-ray generator.

The experiences of users, the radiologists, improved dramatically. As Dr. Paul Frame of Oak Ridge Associated Universities writes:

Without a doubt, the single most important event in the progress of radiology was the invention by William Coolidge in 1913 of what came to be known as the Coolidge x-ray tube. The characteristic features of the Coolidge tube are its high vacuum and its use of a heated filament as the source of electrons… The key advantages of the Coolidge tube are its stability and the fact that the intensity and energy of the x-rays can be controlled independently… The high degree of control over the tube output meant that the early radiologists could do with one Coolidge tube what before had required a stable of finicky cold cathode tubes.

GE’s innovation had transformed the value of using X-ray tubes. The same basic design for X-ray tubes is still used today in medicine and other fields. Coolidge and Langmuir had not invented the device, but by thinking through the most desirable user-experiences and redesigning the X-ray value-delivery-system accordingly, they produced the key innovation.

Expanding its 1913 medical-imaging value-delivery-system nearly seventy years later (1980), GE successfully developed and introduced the first practical, commercial magnetic-resonance-imaging (MRI) machine. Despite important differences between the 1913 and 1980 innovations, they shared much the same fundamental value proposition––enabling more useful medical diagnosis through interior-body images. Without GE’s long previous experience delivering medical-imaging value, its 1980-83 MRI effort, then widely seen as a low-odds bet, would have been unlikely. In fact, it proved a brilliant natural extension of GE’s X-ray-based value propositions. As Paul Bottomley, a leading member of the GE MRI team, exclaimed in 2019, “Oh, how far it’s come! And oh, how tenuous MRI truly was at the beginning!”

MRI scans use strong magnetic fields, radio waves, and computer analysis to produce three-dimensional images of organs and other soft tissue. These are often more diagnostically useful than X-ray, especially for some conditions such as tumors, strokes, and torn or otherwise abnormal tissue. MRI introduced a new form of medical imaging, providing a much higher resolution image, with significantly more detail, and avoiding X-rays’ risk of ionizing radiation. While it entails a tradeoff of discomfort––claustrophobia and noise––the value proposition for many conditions is clearly superior on balance.

However, in 1980 the GE team was betting on unproven, not-yet-welcome technology. GE and other medical-imaging providers were dedicated to X-ray technology, seeing no reason to invest in MRI. Nonetheless, the team believed that MRI could greatly expand and improve the diagnostic experience for many patients and their physicians while producing ample commercial rewards for GE.

In the 1970s, X-ray technology was advanced by CT scanning (computed tomography), which GE licensed and later bought. CT combines many X-ray images, analyzed by computer, to assemble virtual cross-sectional image “slices” of a body. In the 1970s GE developed faster CT scanning, producing sharper images. The key medical-imaging advancement after 1913, however, would be MRI.

The basis for MRI was the physics phenomenon known as nuclear magnetic resonance (NMR), first measured in the late 1930s and used in chemistry since the 1950s to study the structure of molecules. In the presence of a strong magnetic field, the nuclei of some atoms display specific magnetic properties. In 1973, two researchers suggested that NMR could construct interior-body images, especially of soft tissues (the idea of MRI), later winning them the Nobel Prize.
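The link between field strength and the radio waves used in MRI is the Larmor relation: nuclei in a magnetic field B resonate at a frequency f = γ̄·B, where γ̄ is a constant for each kind of nucleus, about 42.58 MHz per tesla for hydrogen, the nucleus MRI usually images because of the water in soft tissue. The sketch below evaluates this relation at the field strengths that come up in GE’s MRI story; the function and constant names are illustrative.

```python
# Proton (hydrogen-1) gyromagnetic ratio divided by 2*pi, in MHz per tesla.
PROTON_GAMMA_BAR_MHZ_PER_T = 42.577


def larmor_mhz(field_t: float) -> float:
    """Resonance frequency (MHz) of hydrogen nuclei in a field of field_t tesla."""
    # The resonance frequency is directly proportional to the field strength.
    return PROTON_GAMMA_BAR_MHZ_PER_T * field_t


if __name__ == "__main__":
    # Field strengths from this section: GE's planned MRS magnet, competitors'
    # low-field systems, GE's delivered magnet, and the 2.0 T quote.
    for field in (0.12, 0.35, 1.5, 2.0):
        print(f"{field:4.2f} T -> {larmor_mhz(field):6.2f} MHz")
```

A 1.5 T magnet thus requires radio hardware working near 64 MHz, more than twelve times the frequency of a 0.12 T system.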

In the mid-1970s, while earning a Ph.D. in Physics, Bottomley became a proponent of MRI. He also worked on a related technology, MRS––magnetic resonance spectroscopy––but he focused on MRI. Then, in 1980 he interviewed for a job at GE, assuming that GE was interested in his MRI work. However, as he recounts:

I was stunned when they told me that they were not interested in MRI. They said that in their analysis MRI would never compete with X-ray CT which was a major business for GE. Specifically, the GE Medical Systems Division in Milwaukee would not give them any money to support an MRI program. So instead, the Schenectady GE group wanted to build a localized MRS machine to study cardiac metabolism in people.

Bottomley showed them his MRS work and got the job, but he began pursuing his MRI goal. Group Technology Manager Red Reddington also hired another MRI enthusiast, William Edelstein, an Aberdeen graduate, who worked with Bottomley to promote an MRI system using a superconducting magnet. The intensity of the magnetic field produced by such a magnet, its field strength, is measured in tesla (T). GE was then thinking of building an MRS machine with a 0.12 T magnet. Instead, Bottomley and Edelstein convinced the group to attempt a whole-body MRI system with the highest field-strength magnet they could find. The highest-field quote was 2.0 T, from Oxford Instruments, which was unsure whether it could achieve this unprecedented strength. In the spring of 1982, unable to reach 2.0 T, Oxford finally delivered a 1.5 T magnet, still the standard field strength for most MRI systems today.

By that summer, the 1.5 T MRI produced a series of stunning images, with great resolution and detail. The group elected to present the 1.5 T product, with its recently produced images, at the major radiological industry conference in early 1983. This decision, not without risk, was backed by Reddington and other senior management, including by-then CEO Jack Welch. The reaction at the conference illustrated that risk:

We were totally unprepared for the controversy and attacks on what we’d done. One thorn likely underlying much of the response, was that all of the other manufacturers had committed to much lower field MRI products, operating at 0.35 T or less. We were accused of fabricating results, being corporate stooges and told by some that our work was unscientific. Another sore point was that several luminaries in the field had taken written positions that MRI was not possible or practical at such high fields.

Clearly, however, as the dust settled, the market accepted the GE 1.5 T MRI:

Much material on the cons of high field strength (≥0.3 T) can be found in the literature. You can look it up. What is published–right or wrong–lasts forever. You might ask yourself in these modern times in which 1.5 T, 3 T and even 7 T MRI systems are ordinary: What were they thinking? … All of the published material against high field MRI had one positive benefit. We were able to patent MRI systems above a field strength of 0.7 T because it was clearly not “obvious to those skilled in the art,” as the patent office is apt to say.

In 2005, recognizing Edelstein’s achievements, the American Institute of Physics said that MRI “is arguably the most innovative and important medical imaging advance since the advent of the X-ray at the end of the 19th century.” GE has led the global Medical Imaging market since that breakthrough. As Bottomley says, “Today, much of what is done with MRI…would not exist if the glass-ceiling on field-strength had not been broken.”

* * *

Thus, as it had done with its electric lighting-and-power system, GE built and expanded its value-delivery-system for medical-imaging. In 1913, the company recognized the need for a more diagnostically valuable X-ray imaging system––both more stable and efficiently controllable. GE thus innovatively transformed X-ray tubes––enabling much more accurate, reliable, efficient medical diagnosis of patients for physicians and technicians.

Later, the GE MRI team’s bet on MRI in 1980 had looked like a long shot. However, that effort was essentially an expansion of the earlier X-ray-based value proposition for medical imaging. The equally customer-focused MRI bet would likewise pay off well for patients, physicians, and technicians. For many medical conditions, these customers would experience significantly more accurate, reliable, and safe diagnoses. This MRI bet also paid off for GE, making Medical Imaging one of its largest, most profitable businesses.

Science-based product innovation

We began this Part One post by suggesting that GE applied three strategic principles in its first century, though it later largely abandoned them. The first, discussed above, was customer-focused product-innovation. Now we turn to the second of these three principles––science-based product innovation.

In developing product-innovations that each helped deliver some superior value proposition, GE repeatedly used scientific knowledge and analysis. This science-based perspective consistently increased the probability of finding solutions that were truly different and superior for users. Most important GE innovations were either non-obvious or not possible without this scientific perspective. Thus, to help realize genuinely different, more valuable user-experiences, and to make delivery of those experiences profitable and proprietary (e.g., patent-protected), GE businesses made central use of science in their approach to product innovation.

This application included physics and chemistry, along with engineering knowledge and skills, supported by the broad use of mathematics and data. It was frequently led by scientists, empowered by GE to play a leading, decision-making role in product innovation. After 1900, building on Edison’s earlier product-development lab, GE led all industry in establishing a formal scientific laboratory. It was intended to advance the company’s knowledge and understanding of the relevant science, but primarily focused on creating practical, new, or significantly improved products.

Throughout GE’s first century, its science-based approach to product innovation was central to its success. After 1980, of course, GE did not abandon science. However, it became less focused on fundamental product-innovation and more seduced by the shorter-term benefits of marginal cost and quality improvements, and thus retreated from its century-long commitment to science-based product-innovation. Following are some examples of GE’s success with that science-based approach through most of its first century.

* * *

Edison was not himself a scientist, but he knew that his system must be science-based and supported by a technical staff. As Ernest Freeberg writes:

What made him ultimately successful was that he was not a lone inventor, a lone genius, but rather the assembler of the first research and development team, at Menlo Park, N.J.

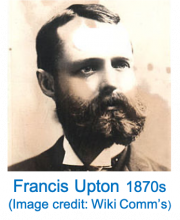

Edison built a technically-skilled staff. Most important among them was Francis Upton, a Princeton- and Berlin-trained mathematician-physicist. Edison made great use of scientific data and expertise. Upton had studied under physicist Hermann von Helmholtz, and now did research and experimentation on lamps, generators, and wiring systems. As Hughes describes, he was invaluable to Edison in “bringing scientific knowledge and methods to bear on Edison’s design ideas.”


Another lab assistant later described Upton as the thinker and “conceptualizer of systems”:

It was Upton who coached Edison about science and its uses in solving technical problems; it was Upton who taught Edison to comprehend the ohm, volt, and weber (ampere) and their relation to one another. Upton, for instance, laid down the project’s commercial and economic distribution system and solved the equations that rationalized it. His tables, his use of scientific units and instruments, made possible the design of the system.

As discussed earlier, the key insight for the lighting-and-power project was the importance of using high-resistance filaments in the incandescent lamps. Edison reached this insight only with Upton’s crucial help. Upton had helped Edison understand Joule’s and Ohm’s fundamental laws of electricity, which relate current, voltage, and resistance to the power delivered and lost. They needed to reduce the energy lost in the conductors. Joule’s law shows that the power lost as heat in a wire equals the square of the current times the wire’s resistance. So, instead of using more copper to lower the wire’s resistance, they could reduce the loss by reducing the current. Bringing in Ohm’s law (resistance equals voltage divided by current) meant that increasing resistance, at a given voltage, would reduce the current in inverse proportion. As historian David Billington explains[2]:

Edison’s great insight was to see that if he could instead raise the resistance of the lamp filament, he could reduce the amount of current in the transmission line needed to deliver a given amount of power. The copper required in the line could then be much lower.

Thus, as Israel continued:

Edison realized that by using high-resistance lamps he could increase the voltage proportionately to the current and thus reduce the size and cost of the conductors.
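The Joule/Ohm reasoning above can be sketched numerically. The Python snippet below is a minimal, illustrative model, not Upton’s actual calculation. It assumes a single lamp fed through a copper line in series and counts line loss relative to the lamp’s own power. It also ignores the voltage drop along the line and Edison’s real parallel distribution network. For a wire of fixed length, resistance is treated as inversely proportional to its cross-section, so the copper needed scales as one over the allowed line resistance. The 100 W lamp, the 10% loss allowance, and the 2 Ω and 100 Ω filament values are hypothetical numbers chosen only for illustration, not historical data.

```python
import math

# Hypothetical, illustrative values (not historical measurements).
LAMP_POWER_W = 100.0   # power each lamp must draw
MAX_LINE_LOSS = 0.10   # allow the line to waste at most 10% of the lamp's power


def lamp_current(power_w: float, filament_ohms: float) -> float:
    """Filament current: Joule's law P = I**2 * R gives I = sqrt(P / R)."""
    return math.sqrt(power_w / filament_ohms)


def lamp_voltage(power_w: float, filament_ohms: float) -> float:
    """Voltage across the filament from Ohm's law, V = I * R."""
    return lamp_current(power_w, filament_ohms) * filament_ohms


def max_line_resistance(power_w: float, filament_ohms: float, max_loss: float) -> float:
    """Largest line resistance keeping the line loss I**2 * R_line <= max_loss * P."""
    current = lamp_current(power_w, filament_ohms)
    return max_loss * power_w / current ** 2


def relative_copper(filament_ohms: float,
                    power_w: float = LAMP_POWER_W,
                    max_loss: float = MAX_LINE_LOSS) -> float:
    """Copper needed, in arbitrary units. For a fixed-length wire, resistance is
    inversely proportional to cross-section, so copper ~ 1 / allowed line resistance."""
    return 1.0 / max_line_resistance(power_w, filament_ohms, max_loss)


if __name__ == "__main__":
    for ohms in (2.0, 100.0):  # hypothetical low- vs high-resistance filaments
        print(f"filament {ohms:6.1f} ohm: "
              f"I = {lamp_current(LAMP_POWER_W, ohms):5.2f} A, "
              f"V = {lamp_voltage(LAMP_POWER_W, ohms):6.2f} V, "
              f"copper = {relative_copper(ohms):5.2f} units")
    ratio = relative_copper(2.0) / relative_copper(100.0)
    print(f"low-resistance lamp needs {ratio:.0f}x the copper")
```

In this simplified model, the allowed line resistance works out to the loss allowance times the filament resistance. The copper ratio therefore equals the ratio of the two filament resistances (here 100 / 2 = 50). Raising the filament resistance also raises the lamp’s operating voltage (V = √(P·R)) while lowering its current. This matches the higher-voltage, lower-current design described above.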

These relationships of current to resistance are elementary to an electrical engineer today. But without Upton and Edison thoughtfully applying these laws of electricity in the late 1870s, it is doubtful they would have arrived at the key conclusion about resistance. Without that insight, the electric lighting-and-power project would have failed. Numerous factors contributed to the success of that original system, and to that of the early GE. Most crucial, however, was Edison and Upton’s science-based approach.

That approach was also important in other innovations discussed above, such as:

- Steinmetz’ law of hysteresis and other mathematical and engineering insights into working with AC and resolving its obstacles

- Langmuir’s scientific exploration and understanding of how vacuum tubes work, leading to the high-vacuum tube and contributing to the Coolidge X-ray tube

- Coolidge’s deep knowledge of tungsten, and his skill in applying this understanding first to lamp filaments and then to the design of his X-ray tube

- And as Bottomley writes, the MRI team’s “enormous physics and engineering effort that it took to transform NMR’s 1960’s technology–which was designed for 5-15 mm test tube chemistry–to whole body medical MRI scanners”

Another example (discussed later in this Part One, in the section on “synergistic product innovation”) is the initial development of the steam turbine for electric power generation, and its later reapplication to aviation. That first innovation––for electric power––obviously required scientific and engineering knowledge. The later reapplication of that turbine technology to aviation was arguably an even more creative, complex innovation. Scientific understanding of combustion, air-compression, thrust, and other principles had to be combined and integrated.

Finally, the power of GE’s science-based approach to product innovation was well illustrated in its plastics business. From about 1890 to the 1940s, GE needed plastics as electrical insulation––non-conducting material––to protect its electrical products from damage by electrical current. For the most part during these years, the company did not treat plastics as an important business opportunity, or an area for major innovation.

However, by the 1920s the temperature limits of insulation were becoming increasingly problematic. Many plastics are non-conductive because they have high electrical resistance, but that resistance decreases at higher temperatures. As power installations expanded and electrical motors did more work, they produced more heat. Beyond about 265° Fahrenheit (later increased to about 310° F), insulation materials would fail. Equipment makers and customers had to use motors and generators made with much more iron and copper, and thus at higher cost, to dissipate the heat.

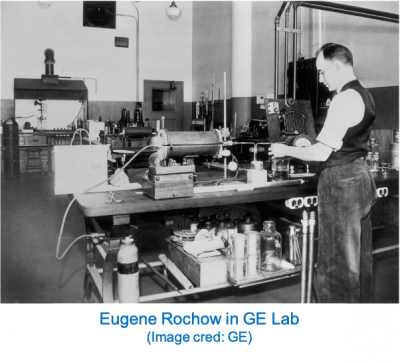

Then, in 1938-40, brilliant GE chemist Eugene Rochow created a clearly superior, GE-owned solution to this high-temperature insulation problem: a polymer raising the limit to above 356° F. It also proved useful in many other applications (sealants, adhesives, lubricants, personal-care products, biomedical implants, and others), and thus became a major business for GE.

Rochow’s success was the first major application of a rigorously science-based approach to product-innovation in GE plastics. This breakthrough traced to insights resulting from his deep knowledge of the relevant chemistry and his application of this understanding to find non-obvious solutions. This success gave GE confidence to take plastics-innovation more seriously, going well beyond insulation and electrical-products support. The science-based approach would be used again to create at least three more new plastics.

In 1938, Corning Glass Works announced a high-temperature electrical insulator called silicone––pioneered years earlier by English chemist Frederick Kipping. Corning hoped to sell its glass fibers for use in electrical insulation. They asked GE––with whom they had previously collaborated––to help them find a practical manufacturing method for the new polymer. Based on this history, they would later complain that Rochow and GE had stolen their innovation.


In reality, Rochow thought deeply about the new polymer and, with his science-based approach, realized that it had very-high-temperature insulation potential. However, he also concluded that Corning’s specific design would lose its insulating strength under the extended exposure to very high temperatures that it would very likely face. Rochow then devised a quite different design for silicone that avoided this crucial flaw.

To illustrate Rochow’s science-based approach, following is a partial glimpse of his thought process. He was an inorganic chemist (unlike the mostly organic chemists at GE and Corning). A silicone polymer’s “backbone” chain is inorganic: alternating silicon and oxygen atoms, with no carbon. As was desirable, this structure helps make silicones good insulators, even at high temperatures. However, for product-design flexibility, most silicones are also partly organic. They have what are called organic “side groups,” containing carbon atoms, attached to the silicon atoms. The Kipping/Corning silicone used ethyl and phenyl side groups. As Rochow recounted in his 1995 oral history:

I thought about it. “Ethyl groups—what happens when you heat ethyl phenyl silicone up to thermal decomposition [i.e., expose it to very high temp]? Well, it carbonizes. You have carbon-carbon bonds in there… You have black carbonaceous residue, which is conducting. [i.e., the silicone will lose its insulating property]. How can we avoid that? Well, by avoiding all the carbon-carbon bonds. What, make an organosilicon derivative [e.g., silicone] without any carbon-carbon bonds? Sure, you can do it. Use methyl. Kipping never made methyl silicone. Nobody else had made it at that time, either. I said, in my stubborn way, ‘I’m going to make some methyl silicone.’”

Rochow did succeed in making methyl silicone and demonstrated that it delivered a clearly superior value proposition for high-temperature electrical insulation. In contrast to the Corning product, this GE silicone maintained its insulating performance even after extended high-temperature exposure. Corning filed patent interferences, losing on all five GE patents. Silicones also proved valuable in the other applications noted above. The business took ten years to become profitable, but silicones became a major category, making GE a leading plastics maker. The same fundamentally science-based approach applied by Rochow would pay off repeatedly with new plastics innovations in the rest of GE’s first century.

In 1953, chemist Daniel Fox, working to improve GE’s electrical-insulating wire enamel, discovered polycarbonates (PC). This new plastic showed excellent properties[3]. It was more transparent than glass, yet very tough, so it looked like acrylic but was much more durable. It was also a good insulator, heat resistant, and able to maintain its properties at high temperatures, and it became a very large-volume plastic.[4]

This discovery is often described as accidental. Luck was involved, as Fox had not set out to invent a material like PC. However, his science-based instinct led to the discovery. PC belong to the carbonate family, long known to hydrolyze easily (break down by reaction with water); thus, researchers had given up on carbonates as unusable. Fox, however, remembered that as a graduate student he had once encountered a particular carbonate that, as part of an experiment, he needed to hydrolyze. Yet, hard as he tried, he could not induce it to hydrolyze. Following that hint, he explored further and found, surprisingly, that PC indeed do not readily hydrolyze; thus, they could be viable as a new plastic.

Asked in 1986 why no one else had discovered PC before him, the first reason he gave was that “…everyone knew that carbonates were easily hydrolyzed and therefore they wouldn’t be the basis for a decent polymer.” Fox described himself as an “opportunist.” Not necessarily following rote convention, he was open to non-obvious routes, even ones that “everyone knew” would not work. Fox recounted how one of his favorite professors, “Speed” Marvel at Illinois, “put forth a philosophy that I remember”:

For example, he [Marvel] told the story of a couple of graduate students having lunch in their little office. One of them ate an apple and threw the apple core out the window. The apple core started down and then turned back up[5]. So, he made a note in his notebook, “Threw apple core out the window, apple core went up,” and went back to reading his magazine. The other man threw his banana peel out the window. It started down and then it went up. But he almost fell out of the window trying to see what was going on. Marvel said, “Both of those guys will probably get Ph.D.’s, but the one will be a technician all of his life.”

I remember that. First you must have all the details, then examine the facts. He that doesn’t pursue the facts will be the technician, Ph.D. or not.

Fox’s insightful, if lucky, discovery proved to be a watershed for GE. Following the major silicones and PC successes, GE was finally convinced that it could, and should aggressively try to, succeed in developing major new plastics, even competing against the chemical giants. Applying the same science-based approach, GE produced the first synthetic diamonds and, later, two additional major new plastics: Noryl and Ultem. These two offered important improvements in heat resistance, among other valuable experiences, at lower cost than some other high-performance plastics.

GE thus helped create today’s plastics era, which delivers low costs and functional or aesthetic benefits. On the downside, as the world has increasingly learned, these benefits are often mixed with an unsettling range of environmental and safety threats. The company was unaware of many of these threats early in its plastics history. Still, at least after 1980, it regrettably failed to apply its science-based capabilities aggressively enough to uncover, and address, some of these threats sooner. Nonetheless, during GE’s first century, its science-based approach was instrumental in its great record of product innovation.

Of course, most businesses today use science to some degree and claim to be committed to product (or service) innovation. However, many of these efforts are in fact primarily focused on marginal improvements––in costs, and in defects or other undesired variations––but not on fundamental improvements in performance of great value to customers. After its first century, GE––as discussed later in Parts Two-Four of this series––would follow that popular if misguided pattern, reducing its emphasis on science-based breakthrough innovation, in favor of easier, but lower-impact, marginal increases in cost and quality. Prior to that shift, however, science-based product-innovation was a second key aspect––beyond its customer-focused approach––of GE’s first-century product innovation.

Synergistic product innovation

Thus, the two key strategic principles discussed above were central to GE’s product-innovation approach in that first century: it was customer-focused and science-based. An important third principle was that, where possible, GE identified or built synergies among its businesses, and then strengthened them. These were shared strengths that facilitated product-innovation of value to customers.

In GE’s first century, all its major businesses except plastics were related to, and synergistically complementary with, one or more other GE businesses, generally rooted in electricity. Thus, though diverse, its non-plastics businesses and innovations in that first era were related via electricity or other aspects of electromagnetism, sharing fundamental customer-related and science-based knowledge and understanding. This shared background and perspective enabled beneficial, synergistically shared capabilities. These synergies included sharing aspects of the businesses’ broadly defined value propositions and sharing some technology for delivering important experiences in those propositions.

The first, and perhaps most obvious, example of this electrical-related synergy was the Edison electric lighting-and-power system. GE’s provision of electric power was the crucial enabler of its lighting, which in turn provided the first purpose and rationale for the power. Later, expanding this initial synergistic model, GE would introduce many household appliances, again both promoting and leveraging the electric power business. These included the first or early versions of the electric fan, toaster, oven, refrigerator, dishwasher, air conditioner, and others.

Many of these GE products were less major breakthrough-innovations than fast-follower versions of products from other inventors eager to apply electricity. An exception was Calrod (an electrically insulating but heat-conducting ceramic), which made cooking safer. Without a new burst of innovation, GE appliances eventually became increasingly commoditized. Perhaps that decay reflected a failure of imagination more than the inevitable result of Asian competition, as many would later claim. In any case, appliances were nonetheless a large business throughout GE’s first century, thanks to those original synergies.

Another example of successful innovation via electrical or electromagnetic synergy was GE’s work on radio. The early radio transmitters of the 1890s generated radio-wave pulses, able to convey only Morse-code dots and dashes, not audio. In 1906, recognizing the need for full-audio radio, a GE team developed what they termed a “continuous wave” transmitter. Physicists had suggested that an electric alternator (a rotating machine that generates alternating current, AC), if run at a high enough frequency, would generate electromagnetic radiation. It thus could serve as a continuous-wave (CW) radio transmitter.

GE’s alternator-transmitter seemed to be such a winner that in 1919 the US government encouraged the company to form the Radio Corporation of America (RCA), to ensure US ownership of this important technology. RCA started developing a huge complex for international radio communications, based on the giant alternators. However, in the 1920s this technology was abruptly supplanted by lower-cost short-wave transmission, enabled by fast-developing vacuum-tube technology. Developing other radio-related products, RCA still became a large, innovative company, but antitrust litigation forced GE to divest its ownership in 1932.

Nonetheless, GE’s CW innovation had major long-term importance. This breakthrough, implemented first via alternators, then via vacuum-tube and later solid-state technology, became the long-term winning principle for radio transmission. Moreover, GE’s related vacuum-tube contributions were significant, including the high-vacuum tube. And, growing out of this radio-technology development, GE later made some contributions to TV development.

However, beyond these relatively early successes, a key example of great GE synergy in that first century was the interaction of power and aviation. Major advances in flight propulsion––creating GE’s huge aviation business––were enabled by its earlier innovations, especially turbine technology from electric power but also tungsten metallurgy from both lighting and X-ray tubes. Moreover, this key product-innovation synergy even flowed in both directions, as later aviation-propulsion innovations were reapplied to electric power.

Shortly after steam turbines replaced steam engines for power generation, gas turbines emerged. They efficiently converted the energy of hot, expanding gas into great rotational power, at first to spin electrical generators. During the First World War, GE engineer Sanford Moss invented a radial gas compressor, using centrifugal force to “squeeze” the air before it entered the engine. Because an aircraft engine lost half its power climbing into the thin air of high altitudes, the US Army, having heard of Moss’s “turbosupercharger” compressor, asked for GE’s help. Moss’s invention proved able to sustain an engine’s full power even at 14,000 feet. GE had entered the aviation business, and it would produce 300,000 turbosuperchargers in WWII. This success was enabled by GE’s knowledge and skills from previous innovations. Austin Weber, in a 2017 article, quotes former GE executive Robert Garvin:

“Moss was able to draw on GE’s experience in the design of high rotating-speed steam turbines, and the metallurgy of ductile tungsten and tungsten alloys used for its light bulb filaments and X-ray targets, to deal with the stresses in the turbine…of a turbo supercharger.”

GE’s delivery of a winning value proposition to aviation users, focused on increased flight-propulsion power provided by continued turbine-technology improvements, would continue and expand into the jet engine era. The primary focus would be military aviation until the 1970s, when GE entered the commercial market. In 1941, Weber continues, the US and British governments selected GE to improve the Allies’ first jet engine, designed by British engineer Frank Whittle. GE was selected because of its “knowledge of the high-temperature metals needed to withstand the heat inside the engine, and its expertise in building superchargers for high-altitude bombers, and turbines for power plants and battleships.”

In 1942, the first US jet was completed with a GE I-A engine, supplanted in 1943 by the first US production jet engine, the J31. Joseph Sorota, on GE’s original team, recounted the experience, explaining that the Whittle engine “was very inefficient. Our engineers developed what now is known as the axial flow compressor.” This compressor is still used in practically every modern jet engine and gas turbine. In 1948, as GE historian Tomas Kellner writes, GE’s Gerhard Neumann improved the jet engine further with the “variable stator”:


It allowed pilots to change the pressure inside the turbine and make planes routinely fly faster than the speed of sound.

GE’s J47 played a key role as the engine for US fighter jets in the Korean War and remained important for military aircraft for another ten years. It became the highest-volume jet engine, with GE producing 35,000 engines. The variable stator innovation led to the J79 military jet engine in the early 1950s, followed by many other advances in this market.

In the 1970s, the company successfully entered the commercial aviation market, and later became a leader there as well. Many improvements followed, with GE’s aviation business reaching about $2.5 billion in sales by 1980 (about 10% of GE’s total).

GE’s application to aviation of turbine and other technology first developed for the electric power business brilliantly demonstrated the company’s ability to capture powerful synergies, based on shared customer-understanding and technology. Then in the 1950s, as Kellner continues:

The improved performance made the aviation engineers realize that their variable vanes and other design innovations could also make power plants more efficient.

Thus, GE reapplied turbine and other aviation innovations––what it now terms aeroderivatives––to strengthen its power business. The company had completed a remarkable virtuous cycle. Like symbiosis in biology, these synergies between two seemingly unrelated businesses were mutually beneficial.

* * *

Therefore, in its first century GE clearly had great success proactively developing and using synergies tied to its electrical and electromagnetic roots. As mentioned at the outset of this Part One post, this synergistic approach was the third strategic principle––after customer-focused and science-based––that GE applied in producing its product-innovations during that era. However, while the dominant characteristic of GE’s first century was its long line of often-synergistic product-innovations, that does not mean that GE maximized all its opportunities to synergistically leverage those key roots.

Perhaps an early, partial example was radio, where GE made important innovations but seemed to give up rather easily on this technology after being forced to divest RCA. GE might instead have pressed on and tried to recapture the lead in this important electrical area.

Then, after 1950 GE again folded too easily in the emerging semiconductor and computer markets. Natural fields for this electrical-technology leader, these markets would be gateways to the vastly significant digital revolution. In the 1970s, or later under Welch, GE still could have gotten serious about re-entering these important markets. It’s hard to believe that GE could not have become a leading player in these if it had really tried. Moreover, beyond missing some opportunities for additional electrically-related synergies, GE also drifted away from strategic dependence on such synergies, by developing plastics. Profitable and rapidly growing, plastics became GE’s sole business not synergistically complementary with its traditional, electrically-related ones.

These probably missed opportunities, and modest strategic drifting, may have foreshadowed some of GE’s future, larger strategic missteps that we will begin to explore in Part Two of this series. Thus, we’ve seen that GE displayed some flaws in its otherwise impressive synergistic approach. Overall, nonetheless, the company’s product-innovation approach and achievements in its first century were largely unmatched. Although later essentially abandoned, this value-delivery-driven model should have been sustained by GE and still merits study and fresh reapplication in today’s world.

In any case, by the 1970s GE needed to rethink its strategy. What would be the basis for synergistically connecting the businesses, going forward? How should the company restructure to ensure that all its non-plastics businesses had a realistic basis for mutually shared synergies, allowing it to profitably deliver superior value?

In answering these questions, GE should have redoubled its commitment to customer-focused, science-based product-innovation, supported by synergies shared among businesses. Using such a strategic approach, although GE might have missed some of the short-term highs of the Welch era, it could have played a sustained role of long-term leadership, including in its core energy markets. However, as GE’s first century wound down, this astounding company––that had led the world in creative, value-delivering product-innovation––seemed to lack the intellectual energy to face such questions again.

Instead, as we will see, the company seemed to largely abandon the central importance of product-innovation, growing more enamored of service––profitably provided on increasingly commoditized products. GE would pursue a series of strategic illusions––believing that it controlled its own destiny, as Jack Welch urged––but would ultimately fail as the company allowed the powerful product-innovation skills of its first century to atrophy. So, we turn next to the Welch strategy––seemingly a great triumph but just the first and pivotal step in that tragic atrophy and decline. Thus, our next post of this GE series will be Part Two, covering 1981-2010––The Unsustainable Triumph of the Welch Strategy.

Footnotes––GE Series Part One:

[1] By this time, some street lighting was provided by electric-arc technology, far too bright for inside use.

[2] David P. Billington and David P. Billington Jr., Power, Speed, and Form: Engineers and the Making of the Twentieth Century (Princeton: Princeton University Press, 2006), 22.

[3] Unfortunately, PC is made with bisphenol A (BPA), which later became controversial due to health concerns.

[4] Bayer discovered PC at about the same time; the two companies shared the rewards via cross-licensing.

[5] Presumably meaning it stopped and flew back up, above the window.